PaulKraemer

Electrical

- Jan 13, 2012

Hi,

I have a 10 HP AC motor with a nameplate speed of 1750 RPM. This motor is used for tension control in a winder application. It is controlled by a variable frequency drive (VFD) configured in torque control mode, and I can send the drive a 0-20 mA signal to vary the torque between 0 and 100%. I am having a hard time understanding what 0-100% corresponds to in actual torque. I believe the formula relating torque, horsepower, and speed is torque (lb-ft) = horsepower * 5252 / rpm. Entering the nameplate information into this formula, I get:

torque = 10 * 5252 / 1750 = 30.01 lb-ft
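As a quick check of the arithmetic above, here is a minimal Python sketch (the function and constant names are illustrative only, not from any drive vendor). It also shows where 5252 comes from: 1 hp is 33,000 ft·lbf/min, and shaft power equals torque times 2π times rpm, so 5252 ≈ 33,000 / 2π.

```python
import math

# 1 hp = 33,000 ft·lbf/min, and shaft power = torque × (2π × rpm),
# so torque [lb-ft] = hp × 33,000 / (2π × rpm). The familiar 5252 is 33,000 / (2π).
HP_TO_LBFT_RPM = 33_000 / (2 * math.pi)  # ≈ 5252.1


def rated_torque_lbft(hp: float, rpm: float) -> float:
    """Torque in lb-ft corresponding to the given horsepower at the given shaft speed."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    return hp * HP_TO_LBFT_RPM / rpm


# Nameplate values from the question: 10 hp at 1750 rpm.
print(f"{rated_torque_lbft(10, 1750):.2f} lb-ft")  # prints "30.01 lb-ft"
```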

I imagine (please correct me if I am wrong) that this would be the maximum torque this motor can produce at 1750 RPM. That makes me think that as I vary my control signal between 0 and 20 mA, the torque at 1750 RPM would vary accordingly between 0 and 30.01 lb-ft.
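The 0-20 mA to torque mapping described here can be sketched the same way. This assumes, as the question does, a linear scale where 0 mA is 0% and 20 mA is 100% of the nameplate torque. The helper name is hypothetical, and a real drive's analog-input scaling (including what it treats as 100% torque) is set by its own parameters.

```python
# Nameplate torque from torque = hp * 5252 / rpm, ≈ 30.01 lb-ft.
RATED_TORQUE_LBFT = 10 * 5252 / 1750


def torque_command_lbft(signal_ma: float, rated_lbft: float = RATED_TORQUE_LBFT) -> float:
    """Map a 0-20 mA analog input to a torque command in lb-ft.

    Hypothetical linear scaling: 0 mA = 0 %, 20 mA = 100 % of rated torque.
    """
    if not 0.0 <= signal_ma <= 20.0:
        raise ValueError("signal must be within 0-20 mA")
    percent = signal_ma / 20.0 * 100.0
    return rated_lbft * percent / 100.0


for ma in (0, 5, 10, 20):
    print(f"{ma:>2} mA -> {torque_command_lbft(ma):5.2f} lb-ft")
```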

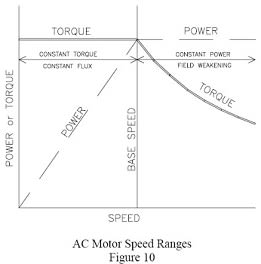

If my reasoning above is correct, I am not sure what it implies that the motor will be running significantly slower than 1750 RPM during normal operation. Given torque = HP * 5252 / rpm, it seems to me that if the RPM decreases, either the torque or the horsepower must also change for the equation to hold. For a typical AC motor with a VFD in torque control mode, should I assume that the maximum torque (the torque resulting from a 20 mA control signal) is the same at all speeds, meaning HP decreases as speed decreases? If not, the equation suggests that if HP stays constant, the maximum torque would have to increase as speed decreases.
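To make the two scenarios in this question concrete, the sketch below rearranges the same equation (hp = torque * rpm / 5252) and tabulates both cases at a few arbitrary example speeds. It only shows what the equation implies under each assumption; it does not say which behavior a particular VFD in torque mode actually delivers. The helper names are illustrative.

```python
RATED_TORQUE_LBFT = 10 * 5252 / 1750  # ≈ 30.01 lb-ft at nameplate speed
RATED_HP = 10.0


def hp_at(torque_lbft: float, rpm: float) -> float:
    """Horsepower from the rearranged relation hp = torque * rpm / 5252."""
    return torque_lbft * rpm / 5252


def torque_at(hp: float, rpm: float) -> float:
    """Torque in lb-ft from torque = hp * 5252 / rpm."""
    return hp * 5252 / rpm


print(f"{'rpm':>5} {'hp @ const torque':>18} {'torque @ const hp':>18}")
for rpm in (1750, 875, 300):
    # Scenario A: torque held at the nameplate value, so hp scales down with speed.
    hp_a = hp_at(RATED_TORQUE_LBFT, rpm)
    # Scenario B: hp held at the nameplate value, so torque grows as speed falls.
    t_b = torque_at(RATED_HP, rpm)
    print(f"{rpm:>5} {hp_a:>18.2f} {t_b:>18.2f}")
```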

If anyone here can clear this up for me, I would greatly appreciate it.

Thanks in advance,

Paul